How much chemistry to be useful?

Training with low chemical information.

- So, what are we going to do?

- How can we convert molecules to images?

- Load data

- Training our model

- Interpretation

- Exporting the model

- Fin

from rdkit.Chem import MolFromSmiles, MolToSmiles

from fastai.vision.all import *

from rdkit.Chem import AllChem

from rdkit import Chem

import numpy as np

import pandas as pd

import IPython.display

from PIL import Image

Hi! This is will be the first post from my new blog. I hope you guys enjoy it.

I'm doing the Fast.AI course and I decided to try solve some problems from my field of research (Cheminformatics) using the fastai package. In this blog, I will try to follow the chapters from the Deep Learning for Coders with fastai & Pytorch. For instance, this notebook was inspired by chapter 2 (the notebook version can be found here)

Let's begin!

In this notebook we will try to solve a quantitative structure-activity relationship QSAR problem. What does this mean? Well, QSAR is a major aspect of Cheminformatics. The goal of QSAR is to find useful information in chemical and biological data and use this information for inference on new data. For example, we might want to predict if a molecule is active on a particular biological target. We could start with a dataset of experimentally measured bioactivities (e.g. $IC_{50}$ values, inhibition constants etc) and train a model for bioactivity prediction. This model can be used to predict the bioactivity of other molecules of interest. By using QSAR, BigPharma and research groups can generate new hypothesis much faster and cheaper than testing a bunch of molecules in vitro.

Traditionally, machine learning methods such as random forest, support vector machines and gradient boosting dominate the field. That's because these classical methods usually give very good results for a range of datasets and are quite easy to train. Until recently, researchers did not apply deep learning in large scale to bioactivity prediction. When they did, it was usually in the form of fully connected neural with just 2-5 layers. However, the last 5 years saw a BOOM in the number of publications using deep learning in very interesting ways! For example, recurrent neural networks are being applied to generate molecules, convnets are showing SOTA performance on binding affinity and pose prediction and multi-task learning was used successfully to win a Kaggle competition for bioactivity prediction!

The most common type of data for QSAR is tabular. Researchers usually calculate many chemical features to describe a collection of molecules. As an example, one of the most common consists in a binary vector indicating the presence/absence of chemical groups in a molecule. We can then use this fingerprint to train a macihine learning model for bioactivity prediction.

In this notebook we will use a different strategy. Instead of calculating a bunch of vectors, we'll convert each molecule to an image and feed that input to a neural network! As Jeremy said in the book:

Another point to consider is that although your problem might not look like a computer vision problem, it might be possible with a little imagination to turn it into one. For instance, if what you are trying to classify are sounds, you might try converting the sounds into images of their acoustic waveforms and then training a model on those images.

Let's try that!

In reality, we are not going to convert molecules to images of molecules. What we actually need is a way to represent molecules the same way as images. That way consists of using arrays. For example, an image can be represented as 3D array of shape $(W, H, C)$, where $W$ is the width, $H$ is the height and $C$ is the number of channels. If we could do that to molecules, then it would be straightforward to use it as input to a model.

There are many ways to do that, but we are going to use one that I think is very interesting. In 2017, Garrett B. Goh, Charles Siegel, Abhinav Vishnu, Nathan O. Hodas and Nathan Baker published a preprint showing that machine learning models actually don't need to know much about chemistry or biology to make a prediction!

In their original manuscript, the authors called their model Chemception and showed that using very, very simple image-like inputs it was possible to achieve SOTA performance on some public datasets. That's quite an achievement! Until yesterday, the cheminformatics community was using handcrafted features and now it seems we don't even need to tell many things about molecules to train a predictive model!

As the author mentioned in the preprint:

In addition, it should be emphasized that no additional source of chemistry-inspired features or inputs, such as molecular descriptors or fingerprints were used in training the model. This means that Chemception was not explicitly provided with even the most basic chemical concepts like “valency” or “periodicity”.

This means that Chemception had to learn A LOT about chemistry from scratch, using only not very informative inputs (to humans, at least)!

I really find this amazing!

Just to clarify:I'm not saying the model would be useful in real settings. But it is quite amazing to see a good performance without using elaborate chemical descriptors.

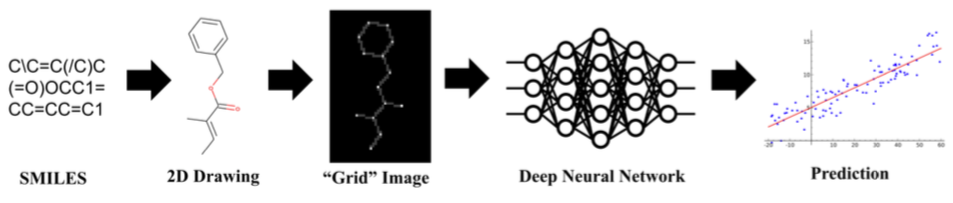

The Chemception model is a convolutional neural network for QSAR tasks. An overview of their method is shown below:

mols = pd.read_csv('/home/marcossantana/Documentos/GitHub/fiocruzcheminformatics/_data/fxa_ic50_processed.csv',sep=';')

mols.head(2)

The chemcepterize_mol function below will take care of converting the molecules SMILES strings (a one-line representation of the chemical structure) to the image-like format that we want.

Our workflow will go like this: first we define an embedding dimension and a resolution. You can think of this is a black canvas where the structures will be plotted and each atom will have a resolution consisting of how many pixels will be used to represent it. The dimensions of the canvas will be given by:

$$DIM = \frac{EMBED*2}{RES}$$

where $EMBED$ is the embedding size and $RES$ the resolution

The next step consists of calculating some basic chemical information from the structure, such as bond order, charges, atomic numbers and the hybridization states. This information will be converted into a matrix of shape $(P, DIM, DIM)$, where $P$ is the number of properties or channels in the image (in this case we will use 3) and $DIM$ is the dimension of the canvas.

The properties we will calculated are shown in the figure below from the original manuscript.

![]()

def chemcepterize_mol(mol, embed=20.0, res=0.5):

dims = int(embed*2/res)

#print(dims)

#print(mol)

#print(",,,,,,,,,,,,,,,,,,,,,,")

cmol = Chem.Mol(mol.ToBinary())

#print(cmol)

#print(",,,,,,,,,,,,,,,,,,,,,,")

cmol.ComputeGasteigerCharges()

AllChem.Compute2DCoords(cmol)

coords = cmol.GetConformer(0).GetPositions()

#print(coords)

#print(",,,,,,,,,,,,,,,,,,,,,,")

vect = np.zeros((dims+2,dims+2,4)) # I added 2 pixels on to height and width because this function sometimes does not work if the molecule is too big.

#Bonds first

for i,bond in enumerate(mol.GetBonds()):

bondorder = bond.GetBondTypeAsDouble()

bidx = bond.GetBeginAtomIdx()

eidx = bond.GetEndAtomIdx()

bcoords = coords[bidx]

ecoords = coords[eidx]

frac = np.linspace(0,1,int(1/res*2)) #

for f in frac:

c = (f*bcoords + (1-f)*ecoords)

idx = int(round((c[0] + embed)/res))

idy = int(round((c[1]+ embed)/res))

#Save in the vector first channel

vect[ idx , idy ,0] = bondorder

#Atom Layers

for i,atom in enumerate(cmol.GetAtoms()):

idx = int(round((coords[i][0] + embed)/res))

idy = int(round((coords[i][1]+ embed)/res))

#Atomic number

vect[ idx , idy, 1] = atom.GetAtomicNum()

#Hybridization

hyptype = atom.GetHybridization().real

vect[ idx , idy, 2] = hyptype

#Gasteiger Charges

charge = atom.GetProp("_GasteigerCharge")

vect[ idx , idy, 3] = charge

return Tensor(vect[:, :, :3].T) # We will omit the last dimension just to fit our fastai models. But you can also adapt the architeture to deal with 4 or more channels.

First, we will create a column called mol that maps our molecular structures to rdkit.Chem.rdchem.Mol objects. This is essential because we are going to use Rdkit to calculate everything and rdkit.Chem.rdchem.Mol has a bunch of nice functionalities to work with molecular graphs.

mol= MolFromSmiles('c1ccccc1') # A rdkit.Chem.rdchem.Mol object representing benzene

type(mol)

mols['mol'] = mols['processed_smiles'].apply(MolFromSmiles)

Now we are going to vectorize our molecules and transform them to image-like matrices.But first, let's test our function.

def vectorize(mol, embed, res):

return chemcepterize_mol(mol, embed=embed, res=res)

v = vectorize(mols["mol"][0],embed=56, res=0.5).T.numpy()

Ok, now let's see what that does!

As you can see, the image is mostly black space and the molecule is just a tiny, tiny part of it (shown in red). The black spaces have no chemical information at all! That's why the authors said Chemception had to learn everything from scratch!

plt.show(print(v.shape))

plt.imshow(v)

We can make bigger molecules by reducing the embedding size. But beware that will also reduce the total image size.

larger_img = vectorize(mols["mol"][0],embed=16, res=0.5).T.numpy()

plt.show(print((larger_img.shape)))

plt.imshow(larger_img)

mols["molimage"] = mols["mol"].apply(partial(vectorize, embed=32, res=0.25))

plt.imshow(mols["molimage"][0].T.numpy())

Now that we know how to convert the molecules to the desired format, we are ready to train a model using fastai! In order to do that, we need to define three things

First, let's define how to split our data into training and validation sets. Luckly, our dataset comes with a column called "is_valid" showing which molecules should be used for validation and training. Therefore, we will use the ColSplitter class from fastai to get the indeces. We could also do a random split here or any kind at all. Fastai is very flexible!

splits = ColSplitter('is_valid')(mols)

splits

We need to tell fastai how to get the items that we'll feed to our model. In this case, we will use the images we created and stored in the "molimage" column of our dataframe.

x_tfms = ColReader('molimage')

Now we need to tell fastai where are our targets. In this case, our targets are in the column "act", showing the bioactivity of each molecule. We will also tell fastai to treat the values of this column as categories, which will be used to train a classification model.

y_tfms = [ColReader('act'),Categorize]

The fastai book uses the DataBlock functionality to create the dataloaders at Chapter 2 and Jeremy says that we actually need four things

- What kind of data to work with;

- How to get the items;

- How to label these items;

- How to create a validation set.

But since we are using a custom data type, we'll skip the step defining the kind of data.

mol_dataset = Datasets(mols,[x_tfms,y_tfms], splits=splits)

x,y = mol_dataset[0]

x.shape,y

dls = mol_dataset.dataloaders(batch_size=8)

Let's inspect one batch to see if everything is already:

x,y = dls.one_batch()

x,y

It seems everything is in order. Alright! Let's train this beast!

metrics = [Recall(pos_label=1),Recall(pos_label = 0), Precision(pos_label = 0), MatthewsCorrCoef()]

learn = cnn_learner(dls, resnet18, metrics=metrics,ps=0.25)

learn.fine_tune(10)

It seems everything went pretty well! In addition, the Matthew's correlation coefficient is quite decent (~0.46).

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

The performance is pretty decent, if without any kind of data augmentation and hyperparameter optimization.

In the original paper, the authors used random rotations of the images. Why is that? Well, since most of the image is empty space, if we distort it even a little bit, by cropping or squishing, it might completly change the molecule represented. Rotating the image is a solution to the data augmentation problem because in this particular case it won't change the meaning of our images.

dls = mol_dataset.dataloaders(batch_size=8,after_batch=Rotate(max_deg=180))

learn = cnn_learner(dls, resnet18, metrics=metrics,ps=0.25)

learn.fine_tune(10)

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

Now that we trained the model, we can export it and use it for inference.

learn.export()

learn_inf = load_learner('export.pkl')

learn_inf.predict(mols['molimage'][2])